The Hardware Lottery

Introduction

Hardware, systems and algorithms research communities have historically had different incentive structures and fluctuating motivation to engage with each other explicitly. This historical treatment is odd given that hardware and software have frequently determined which research ideas succeed (and fail).

This essay introduces the term hardware lottery to describe when a research idea wins because it is suited to the available software and hardware and not because the idea is universally superior to alternative research directions. History tells us that hardware lotteries can obfuscate research progress by casting successful ideas as failures and can delay signaling that some research directions are far more promising than others.

These lessons are particularly salient as we move into a new era of

closer collaboration between hardware, software and machine learning

research communities. After decades of treating hardware, software and

algorithms as separate choices, the catalysts for closer collaboration

include changing hardware economics

Closer collaboration has centered on a wave of new generation hardware that is "domain specific" to optimize for commercial use cases of deep neural networks. While domain specialization creates important efficiency gains, it arguably makes it more even more costly to stray off of the beaten path of research ideas. While deep neural networks have clear commercial use cases, there are early warning signs that the path to true artificial intelligence may require an entirely different combination of algorithm, hardware and software.

This essay begins by acknowledging a crucial paradox: machine learning researchers mostly ignore hardware despite the role it plays in determining what ideas succeed. What has incentivized the development of software, hardware and algorithms in isolation? What follows is part position paper, part historical review that attempts to answer the question, "How does tooling choose which research ideas succeed and fail, and what does the future hold?"

Separate Tribes

It is not a bad description of man to describe him as a tool making animal.

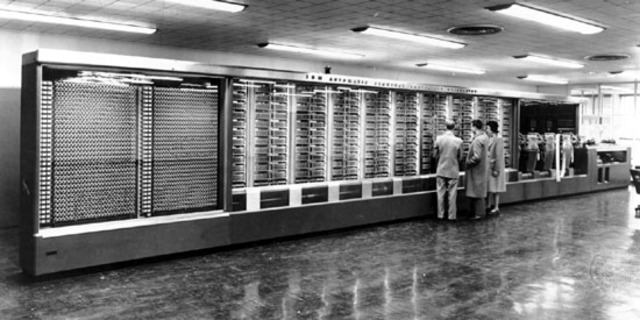

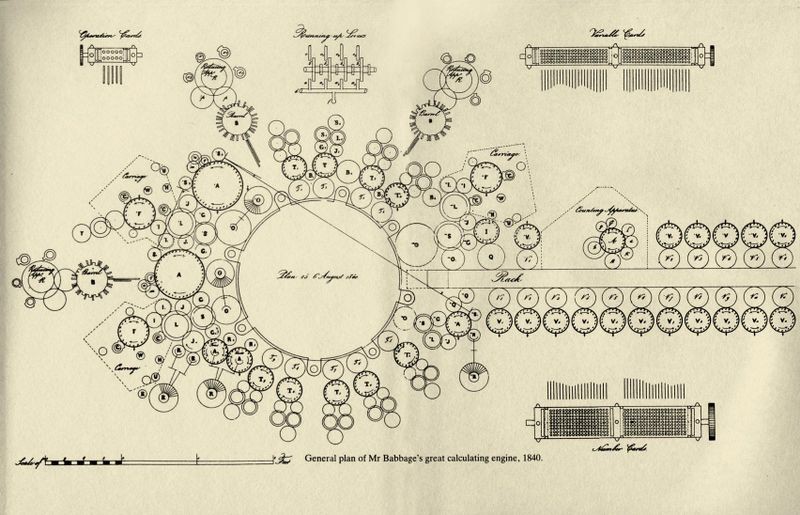

For the creators of the first computers the program was the machine.

Early machines were single use and were not expected to be

re-purposed for a new task because of both the cost of the

electronics and a lack of cross-purpose software. Charles Babbage’s

difference machine was intended solely to compute polynomial

functions (1817)

The specialization of these early computers was out of necessity and

not because computer architects thought one-off customized hardware

was intrinsically better. However, it is worth pointing out that our

own intelligence is both algorithm and machine. We do not inhabit

multiple brains over the course of our lifetime. Instead, the notion

of human intelligence is intrinsically associated with the physical

1400g of brain tissue and the patterns of connectivity between an

estimated 85 billion neurons in your head

Today, in contrast to the necessary specialization in the very early days of computing, machine learning researchers tend to think of hardware, software and algorithm as three separate choices. This is largely due to a period in computer science history that radically changed the type of hardware that was made and incentivized hardware, software and machine learning research communities to evolve in isolation.

The general purpose computer era crystalized in 1969, when opinion

piece by a young engineer called Gordan Moore appeared in

Electronics magazine with the apt title “Cramming more components

onto circuit boards”

Moore’s law combined with Dennard scaling

The emphasis shifted to universal processors which could solve a

myriad of different tasks. Why experiment on more specialized

hardware designs for an uncertain reward when Moore’s law allowed

chip makers to lock in predictable profit margins? The few attempts

to deviate and produce specialized supercomputers for research were

financially unsustainable and short lived

Treating the choice of hardware, software and algorithm as

independent has persisted until recently. It is expensive to explore

new types of hardware, both in terms of time and capital required.

Producing a next generation chip typically costs $30-80 million

dollars and takes 2-3 years to develop

In the absence of any lever with which to influence hardware development, machine learning researchers rationally began to treat the hardware as a sunk cost to work around rather than something fluid that could be shaped. However, just because we have abstracted away hardware doesn’t mean that it has disappeared. Early computer science history tells us there are many hardware lotteries where the choice of hardware and software has determined which ideas succeeded (and which failed).

The Hardware Lottery

I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail.

The first sentence of Anna Karenina by Tolstoy reads “Happy

families are all alike, every unhappy family is unhappy in it’s

own way.”

Despite our preference to believe algorithms succeed or fail in isolation, history tells us that most computer science breakthroughs follow the Anna Kerenina principle. Successful breakthroughs are often distinguished from failures by benefiting from multiple criteria aligning surreptitiously. For computer science research, this often depends upon winning what this essay terms the hardware lottery — avoiding possible points of failure in downstream hardware and software choices.

An early example of a hardware lottery is the analytical machine

(1837). Charles Babbage was a computer pioneer who designed a

machine that (at least in theory) could be programmed to solve any

type of computation. His analytical engine was never built in part

because he had difficulty fabricating parts with the correct

precision

As noted in the TV show Silicon Valley, often “being too early is the same as being wrong”. When Babbage passed away in 1871, there was no continuous path between his ideas and modern day computing. The concept of a stored program, modifiable code, memory and conditional branching were rediscovered a century later because the right tools existed to empirically show that the idea worked.

The Lost Decades

Perhaps the most salient example of the damage caused by not

winning the hardware lottery is the delayed recognition of deep

neural networks as a promising direction of research. Most of the

algorithmic components to make deep neural networks work had

already been in place for a few decades: backpropagation (1963

This gap between algorithmic advances and empirical success is in

large part due to incompatible hardware. During the general

purpose computing era, hardware like CPUs were heavily favored and

widely available. CPUs are very good at executing any set of

complex instructions but often incur high memory costs because of

the need to cache intermediate results and process one instruction

at a time.

The von Neumann bottleneck was terribly ill-suited to matrix

multiplies, a core component of deep neural network architectures.

Thus, training on CPUs quickly exhausted memory bandwidth and it

simply wasn’t possible to train deep neural networks with multiple

layers. The need for hardware that was massively parallel was

pointed out as far back as the early 1980s in a series of essays

titled “Parallel Models of Associative Memory.”

In the late 1980s/90s, the idea of specialized hardware for neural

networks had passed the novelty stage

It would take a hardware fluke in the early 2000s, a full four

decades after the first paper about backpropagation was published,

for the insight about massive parallelism to be operationalized in

a useful way for connectionist deep neural networks. Many

inventions are re-purposed for means unintended by their

designers. Edison’s phonograph was never intended to play music.

He envisioned it as preserving the last words of dying people or

teaching spelling. In fact, he was disappointed by its use playing

popular music as he thought this was too “base” an application of

his invention

A graphical processing unit (GPU) was originally introduced in the

1970s as a specialized accelerator for video games and developing

graphics for movies and animation. In the 2000s, like Edison’s

phonograph, GPUs were re-purposed for an entirely unimagined use

case -- to train deep neural networks

This higher number of floating operation points per second (FLOPS)

combined with clever distribution of training between GPUs

unblocked the training of deeper networks. The number of layers in

a network turned out to be the key. Performance on ImageNet jumped

with ever deeper networks in 2011

Software Lotteries

Software also plays a role in deciding which research ideas win

and lose. Prolog and LISP were two languages heavily favored until

the mid-90’s in the AI community. For most of this period,

students of AI were expected to actively master one or both of

these languages

For researchers who wanted to work on connectionist ideas like

deep neural networks there was not a clearly suited language of

choice until the emergence of Matlab in 1992

Where there is a loser, there is also a winner. From the 1960s

through the mid 80s, most mainstream research was focused on

symbolic approaches to AI

The Persistence of the Hardware Lottery

Today, there is renewed interest in joint collaboration between

hardware, software and machine learning communities. We are

experiencing a second pendulum swing back to specialized hardware.

The catalysts include changing hardware economics prompted by the

end of Moore’s law and the breakdown of dennard scaling

The end of Moore’s law means we are not guaranteed more compute,

hardware will have to earn it. To improve efficiency, there is a

shift from task agnostic hardware like CPUs to domain specialized

hardware that tailor the design to make certain tasks more

efficient. The first examples of domain specialized hardware

released over the last few years -- TPUs

Closer collaboration between hardware and research communities

will undoubtedly continue to make the training and deployment of

deep neural networks more efficient. For example, unstructured

pruning

While these compression techniques are currently not supported,

many clever hardware architects are currently thinking about how

to solve for this. It is a reasonable prediction that the next few

generations of chips or specialized kernels will correct for

present hardware bias against these techniques

In many ways, hardware is catching up to the present state of

machine learning research. Hardware is only economically viable if

the lifetime of the use case lasts more than three years

There is still a separate question of whether hardware innovation is versatile enough to unlock or keep pace with entirely new machine learning research directions. It is difficult to answer this question because data points here are limited -- it is hard to model the counterfactual of would this idea succeed given different hardware. However, despite the inherent challenge of this task, there is already compelling evidence that domain specialized hardware makes it more costly for research ideas that stray outside of the mainstream to succeed.

In 2019, a paper was published called “Machine learning is stuck

in a rut.”

Whether or not you agree that capsule networks are the future of computer vision, the authors say something interesting about the difficulty of trying to train a new type of image classification architecture on domain specialized hardware. Hardware design has prioritized delivering on commercial use cases, while built-in flexibility to accommodate the next generation of research ideas remains a distant secondary consideration.

While specialization makes deep neural networks more efficient, it also makes it far more costly to stray from accepted building blocks. It prompts the question of how much researchers will implicitly overfit to ideas that operationalize well on available hardware rather than take a risk on ideas that are not currently feasible? What are the failures we still don’t have the hardware to see as a success?

The Likelyhood of Future Hardware Lotteries

What we have before us are some breathtaking opportunities disguised as insoluble problems.

It is an ongoing, open debate within the machine learning

community as to how much future algorithms will stray from models

like deep neural networks

Betting heavily on specialized hardware makes sense if you think that future breakthroughs depend upon pairing deep neural networks with ever increasing amounts of data and computation. Several major research labs are making this bet, engaging in a “bigger is better” race in the number of model parameters and collecting ever more expansive datasets. However, it is unclear whether this is sustainable. An algorithms scalability is often thought of as the performance gradient relative to the available resources. Given more resources, how does performance increase?

For many subfields, we are now in a regime where the rate of

return for additional parameters is decreasing

Perhaps more troubling is how far away we are from the type of

intelligence humans demonstrate. Human brains despite their

complexity remain extremely energy efficient. Our brain has over

85 billion neurons but runs on the energy equivalent of an

electric shaver

Biological examples of intelligence differ from deep neural

networks in enough ways to suggest it is a risky bet to say that

deep neural networks are the only way forward. While general

purpose algorithms like deep neural networks rely on global

updates in order to learn a useful representation, our brains do

not. Our own intelligence relies on decentralized local updates

which surface a global signal in ways that are still not well

understood

In addition, our brains are able to learn efficient

representations from far fewer labelled examples than deep neural

networks

Humans have highly optimized and specific pathways developed in

our biological hardware for different tasks

The point of these examples is not to convince you that deep neural networks are not the way forward. But, rather that there are clearly other models of intelligence which suggest it may not be the only way. It is possible that the next breakthrough will require a fundamentally different way of modelling the world with a different combination of hardware, software and algorithm. We may very well be in the midst of a present day hardware lottery.

The Way Forward

Any machine coding system should be judged quite largely from the point of view of how easy it is for the operator to obtain results.

Scientific progress occurs when there is a confluence of factors which allows the scientist to overcome the "stickyness" of the existing paradigm. The speed at which paradigm shifts have happened in AI research have been disproportionately determined by the degree of alignment between hardware, software and algorithm. Thus, any attempt to avoid hardware lotteries must be concerned with making it cheaper and less time-consuming to explore different hardware-software-algorithm combinations.

This is easier said than done. Expanding the search space of

possible hardware-software-algorithm combinations is a

formidable goal. It is expensive to explore new types of

hardware, both in terms of time and capital required. Producing

a next generation chip typically costs $30-$80 million dollars

and 2-3 years to develop

Experiments using reinforcement learning to optimize chip

placement may help decrease cost

In the short to medium term hardware development is likely to

remain expensive and prolonged. The cost of producing hardware

is important because it determines the amount of risk and

experimentation hardware developers are willing to tolerate.

Investment in hardware tailored to deep neural networks is

assured because neural networks are a cornerstone of enough

commercial use cases. The widespread profitability of deep

learning has spurred a healthy ecosystem of hardware startups

that aim to further accelerate deep neural networks

The bottleneck will continue to be funding hardware for use

cases that are not immediately commercially viable. These more

risky directions include biological hardware

Lessons from previous hardware lotteries suggest that investment

must be sustained and come from both private and public funding

programs. There is a slow awakening of public interest in

providing such dedicated resources, such as the 2018 DARPA

Electronics Resurgence Initiative which has committed to $1.5

billion dollars in funding for microelectronic technology

research

The Software Revolution

An interim goal is to provide better feedback loops to

researchers about how our algorithms interact with the

hardware we do have. Machine learning researchers do not spend

much time talking about how hardware chooses which ideas

succeed and which fail. This is primarily because it is hard

to quantify the cost of being concerned. At present, there are

no easy and cheap to use interfaces to benchmark algorithm

performance against multiple types of hardware at once. There

are frustrating differences in the subset of software

operations supported on different types of hardware which

prevent the portability of algorithms across hardware types

These challenges are compounded by an ever more formidable and

heterogeneous hardware landscape

One way to mitigate this need for specialized software

expertise is focusing on the development of domain-specific

languages which are designed to focus on a narrow domain.

While you give up expressive power, domain-specific languages

permit greater portability across different types of hardware.

It allow developers to focus on the intent of the code without

worrying about implementation details

The difficulty of both these approaches is that if successful, this further abstracts humans from the details of the implementation. In parallel, we need better profiling tools to allow researchers to have a more informed opinion about how hardware and software should evolve. Ideally, software could even surface recommendations about what type of hardware to use given the configuration of an algorithm. Registering what differs from our expectations remains a key catalyst in driving new scientific discoveries.

Software needs to do more work, but it is also well positioned

to do so. We have neglected efficient software throughout the

era of Moore's law, trusting that predictable gains in compute

would compensate for inefficiencies in the software stack.

This means there are many low hanging fruit as we begin to

optimize for more efficient code

Parting Thoughts

George Gilder, an American investor, powerfully described the

computer chip as “inscribing worlds on grains of sand”

About the Author

Sara Hooker is researcher at Google Brain working on training models that fulfill multiple desired criteria -- high performance, interpretable, compact and robust. She is interested in the intersection between hardware, software and algorithms. Correspondance about this essay can be sent to shooker@google.com.

Acknowledgments

Thank you to my wonderful colleagues and peers who took the time to provide valuable feedback on earlier drafts of this essay. In particular, I would like to acknowledge the invaluable input of Utku Evci, Amanda Su, Chip Huyen, Eric Jang, Simon Kornblith, Melissa Fabros, Erich Elsen, Sean Mcpherson, Brian Spiering, Stephanie Sher, Pete Warden, Samy Bengio, Jacques Pienaar, Raziel Alvarez, Laura Florescu, Cliff Young, Dan Hurt, Kevin Swersky, Carles Gelada. Thanks for the institutional support and encouragement of Natacha Mainville, Hugo Larochelle, Aaron Courville and of course Alexander Popper.

Citation

@ARTICLE{2020shooker,

author = {{Hooker}, Sara},

title = "{The Hardware Lottery}",

year = 2020,

url = {https://arxiv.org/abs/1911.05248}

}

.jpg?v=1586843526885)